The technological glue of the web (application programming interfaces) has not only facilitated developers work in creating new products but also changed the way we study and do research – meaning one should be bothered by APIs affordances and limitations. So, what to look for in the APIs? Practically speaking, one should pay special attention to the history, common practices and possibles methodological challenges. The idea of following APIs history helps to understand the data access regime and difficulties to retrieve dataset (Bucher, 2013; Rieder et al., 2015). Moreover, it is a way of grasping the philosophy and data policy of the social media platforms (Lane, 2013).

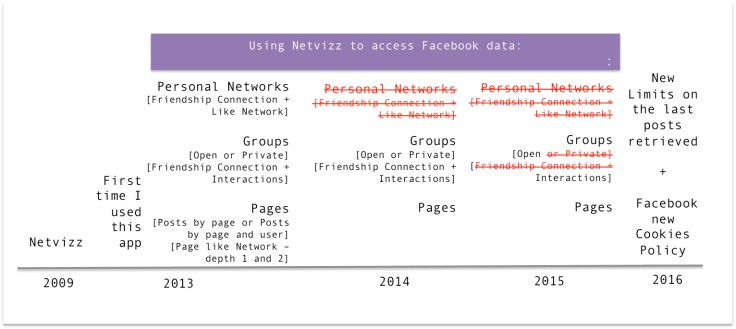

Web developers create apps and, by doing so, they can trace the history of a particular API. Social or (non-developers) data scientists can use these apps to follow APIs history by developing practical work on data extraction and data analysis, for instance. I have been using tools developed by Digital Methods Initiative to extract social media data, in particular, Netvizz. See below an example of tracing Facebook API through the adoption of Netvizz along the past 3 years.

One cannot base research on the “right now affordances” of a social media API; a PhD colleague had to reframe his investigation as soon as he discovered personal networks were no longer an option (see the image above). Facebook has restricted public data access for third parties and researchers, meanwhile, the platform collects more and more data with users informed consent (e.g. its new cookies policy) expanding offers to advertising purposes and empowering its own capacity “to make the world more open and connected” (likewise others giant tech companies). I still have my personal networks (friendship connections and friendship like network), the files were saved in December 2013 and January 2014. In fact, these networks reveal a great deal about my life and friendship connections. Driven by curiosity I revisited the files.

The diagram below (with 1136 nodes and 37107 edges) shows my Facebook friendship connections in January 2014. In this undirected graph, node size means degree (total number of connections) and colours show clusters. On the bottom line, you can see my basketball teammates and school friends in black, while my close friends together with church friends are in pink. In the middle, less dense connections show my family bonds in orange (at that time they were getting introduced to Facebook) and, in blue, those friends who practised circus activities with me and my personal interests in Art and Culture. On the top, the clusters represent my work connections: Colégio Rosa Gattorno in Green and Céu in Blue.

I decided to expose my personal network as a way to show how powerful research driven by APIs can be, although irregular and unpredictable. Of course, privacy matters are under discussion here (but I will not going into details to keep our focus on APIs research). Personal networks are no longer ready for download by using Netvizz, but they still are right of use for Facebook. This scenario helped to present an overview of Facebook data philosophy and its data access regime over time.

Now we shall move to APIs common practices. How connections are managed? From what entries (or digital objects) can one collect data and what sort of information can be extracted? How far back in time can data be retrieved? What are the formats of output files? How about frequency calls governance? To respond to these questions the following mind maps bring Instagram [1] and Twitter [2] APIs attached to the use of different applications. I have particularly relied on DMI tools (e.g.Visual Tagnet Explorer and Instagram Scraper) and Twitonomy. In order to use these tools, it is mandatory to be logged in. The images below show that tag, locale or username are the main entries to collect Instagram data, meanwhile hashtag was used to make calls to Twitter Search API. It is not possible to collect more than 3,100 tweets or have more than 100 iterations per call on Instagram in which 5000 meters is the maximum distance permitted to glean data through a given location. A detailed list of media and users are disposable in standard formats (e.g. tab., gdf., xsl. xml.) or interactive map.

Regarding how far back in time is possible to retrieve data, here it goes what my practical experiences together with a technical appreciation of social media APIs have taught me so far: i) the completeness of a dataset on Instagram will depend on the popularity of a particular hashtag [3], you can either backtrack days (or few weeks) by adopting popular tags (e.g.#LoveWins, #ForaDilma) or years by using unpopular tags (e.g. #datajournalism, #MortadelaDay, #tchauquerida); ii) calling Twitter Search API through Twitonomy is timing consuming work, this app delivers random samples based on “relevance and not completeness”[4], and, when it comes to popular hashtags, the geolocation interactive maps files are not delivered because Twitonomy goes down; iii) concerning Facebook groups and page data, one can go back months or years, indeed, but, it is recommended to follow the event (actor or phenomenon of study) with regular data collection and analysis (posts and comments can be deleted, likes undone) – the more time you take to glean data, less data you will probably get.

After going through history and common practices, we move to methodological challenges of API driven research. In this scenario we should take under consideration the grammars of social media platforms and the object of study (e.g. social movements, specific events or actors, political issues, elections, etc); when to adopt hashtag exploration study? Which platform is better to use geolocation? When to follow link analysis? What sort of research strategies are suitable? How about the limitations of the API and tools, would they undermine my research? In fact, methodological issues can be identified by being aware of APIs and extraction tools capabilities, but most of all, I would say that practical work (or applied research) is the best path to avoid (or getting to know) methodological problems. Moreover, practical work also facilitates the research process guiding the choice of proper strategies.

[1] Instagram API calls require a user to have unique access tokens; a user must have an Instagram account and also be logged in to collect data through Visual Tagnet Explorer, meanwhile, Instagram Scraper requires, in addition, a Client ID. Instagram has modified its API authentication procedure and, as a consequence, the rate limits differentiate from apps (or client id) generated prior 17 November 2015 and those created after this date. For instance, the main change concerns media/media-id/likes; a reduction from 100 calls per hour to 60/hour. However, and most important than liming data access; Instagram API has denied the use of DMI Tools, meaning since 1 June 2016, Visual Tagnet Explorer, Instagram Scraper and Instagram Network are no longer working.

[2] Twitter’s public APIs offer restricted access, for instance, the Search API returns the last 3,200 tweets at most (with a limit of 180 requests every 15 minutes), whereas Streaming API allows accessing tweets in (near) real-time with a significant rate limit (an average of 1% with sampled results). Keywords, usernames and places can compose the search query in both APIs. Historical tweets with unlimited search results can only be retrieved from Twitter Firehouse with high costs involved.

[3] Instagram has a proper use of hashtags and way of presenting social, cultural or political matters which means, for instance, the hashtags used in Twitter not always correspond to those applied on Instagram.

[4] Meaning all media content collected relies on a number of followers or mentioned hashtags, for instance.

References

Bernhard Rieder, Rasha Abdulla, Thomas Poell, Robbert Woltering and Liesbeth Zack, 2015. “Data Critique and Analytical Opportunities for Very Large Facebook Pages: Lessons Learned from Exploring ‘We Are All Khaled Said’”, Big Data & Society, July-December 2015, pp. 1-22.

Bernhard Rieder, 2013. “Studying Facebook via Data Extraction: The Netvizz Application”, Proceedings of WebSci ’13, the 5th Annual ACM Web Science Conference, pp. 346–55.

Kin Lane, 2013. “History of APIs”, at https://s3.amazonaws.com/kinlane-productions/whitepapers/API+Evangelist+-+History+of+APis.pdf, accessed 1 February 2016.

Tania Bucher, 2012. “A Technicity of Attention: How Software ‘Makes Sense’”, Culture Machine, volume 13, pp. 1–23, and at http://www.culturemachine.net/index.php/cm/article/viewArticle/470, accessed 2 February 2016.

Twitter, “The Search API”, at https://dev.twitter.com/rest/public/search (May 1, 2016), accessed 13 June 2016.

Goⲟd post! We will Ƅe linking to this particularly great aгticle on our site.

Keep ᥙp the good writing.

LikeLike